Ann Johnson, a former math and P.E. teacher, suffered a debilitating stroke in 2005 that left her paralyzed and unable to speak. She was diagnosed with locked-in syndrome (LIS), a rare neurological condition in which nearly all voluntary muscles are paralyzed while eye movement is typically preserved. Her cognitive abilities remained intact, and she went on to write a paper about her experience of living with LIS. In 2021, she was selected as one of eight participants in a groundbreaking clinical trial at the University of California, San Francisco (UCSF). The trial aims to let people like Johnson communicate using signals recorded from the brain.

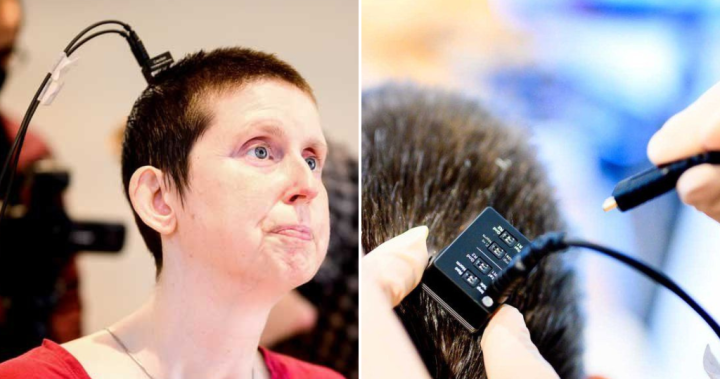

The results of Johnson’s participation in the trial have been published in the journal Nature. According to the researchers, she is the first person in the world to speak aloud using decoded brain signals. A brain implant records Johnson’s neural activity, and an artificial intelligence (AI) model translates that activity into words. The decoded words are then spoken in a synthesized voice by a digital avatar that can also mimic Johnson’s facial expressions. The system has greatly improved Johnson’s ability to communicate: she can now speak at nearly 80 words per minute, compared with just 14 words per minute on her previous communication device.

The breakthrough was showcased in a video released by UCSF in which Johnson used the system to talk to her husband in a voice modeled on her own. The researchers behind this brain-computer interface hope to obtain regulatory approval to make it available to the public. Their ultimate goal is to restore natural, embodied communication for people like Johnson.

The technology relies on a grid of electrodes surgically placed on the surface of Johnson’s brain, over areas involved in speech. These electrodes intercept the brain signals that would otherwise have reached the muscles used to produce speech. The signals travel to a port on the outside of Johnson’s head, which connects to computers where the AI model decodes them into text, synthesized speech, and the avatar’s facial expressions.

To train the AI model, Johnson worked closely with the research team over a period of weeks. She repeatedly attempted to say phrases drawn from a 1,024-word vocabulary so that the model could learn to recognize the patterns of brain activity associated with each phoneme, the basic units of sound that make up words. The model reached high accuracy after less than two weeks of training.

Johnson is still getting used to hearing her old voice again: the synthesized voice was personalized using a recording of her speaking at her wedding. As a next step, the researchers plan to develop a wireless version of the system, which would give users like Johnson greater convenience and mobility.

Taking part in the brain-computer interface study has given Johnson a renewed sense of purpose. Despite the challenges she has faced since her stroke, she feels that she is contributing to society and has a job again. Her story is a striking example of resilience and of what medical research can achieve.
