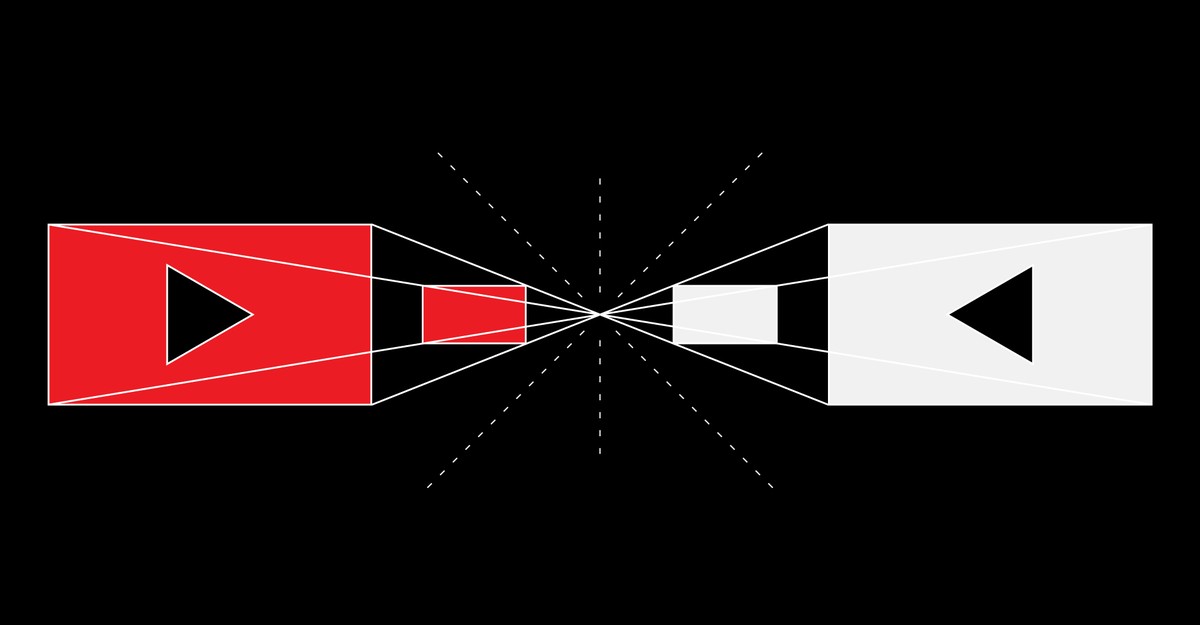

During the 2016 election, YouTube became known as a hub for the alt-right and conspiracy theorists. With over a billion users, YouTube was home to charismatic personalities who had developed strong connections with their audiences, making it a potential political influencer. One prominent figure was Alex Jones, whose channel Infowars had over 2 million subscribers. YouTube’s recommendation algorithm, which determined what users watched, seemed to be leading them into dangerous conspiracies and extremist ideologies. This process of “falling down the rabbit hole” was documented by individuals who found themselves captivated and persuaded by extremist rhetoric. Even individuals not actively seeking extreme content could be influenced by the algorithm, deepening their worst impulses and trapping them in an echo chamber.

At the time, YouTube denied any rabbit-hole problem, but made changes to address criticisms. In early 2019, YouTube announced adjustments to its recommendation system to reduce the promotion of harmful misinformation and borderline content. It also demonetized channels that violated its hate speech policies. The goal was to fill in the rabbit hole.

A peer-reviewed study published in Science Advances today suggests that YouTube’s 2019 update was effective. Led by Brendan Nyhan, a government professor at Dartmouth, the study surveyed 1,181 participants about their political attitudes and monitored their YouTube activity and recommendations for several months in 2020. Only 6% of participants watched extremist videos, and most of those viewers had deliberately subscribed to at least one extremist channel. Furthermore, most individuals found extremist videos through external links rather than through YouTube recommendations.

Contrary to popular belief, the study found that individuals with high levels of gender and racial resentment actively sought out extremist content rather than being unknowingly funneled toward it. Previous studies relied on bots that simulated the experience of using YouTube, which makes this study the first to capture real human behavior. The study has limitations, however: it could not account for YouTube activity before 2020.

Although this study offers valuable insights, it does not absolve YouTube of all responsibility. Extremist content still exists on the platform, and some individuals continue to watch it. The study simply shows that encountering extremist content through YouTube recommendations is currently rare. It also defines the term “rabbit hole” more precisely: a recommendation that leads to a more extreme video when the viewer has no prior subscriptions to similar content.

While this research is significant, it does not provide a complete picture of YouTube’s past. Understanding YouTube’s historical impact is challenging, and it requires examining the platform from multiple angles. The ever-evolving nature of the internet means that as one mystery is solved, another emerges. It is crucial to continue studying the effects of technology and information consumption in order to comprehend the polarizing landscape of today.
