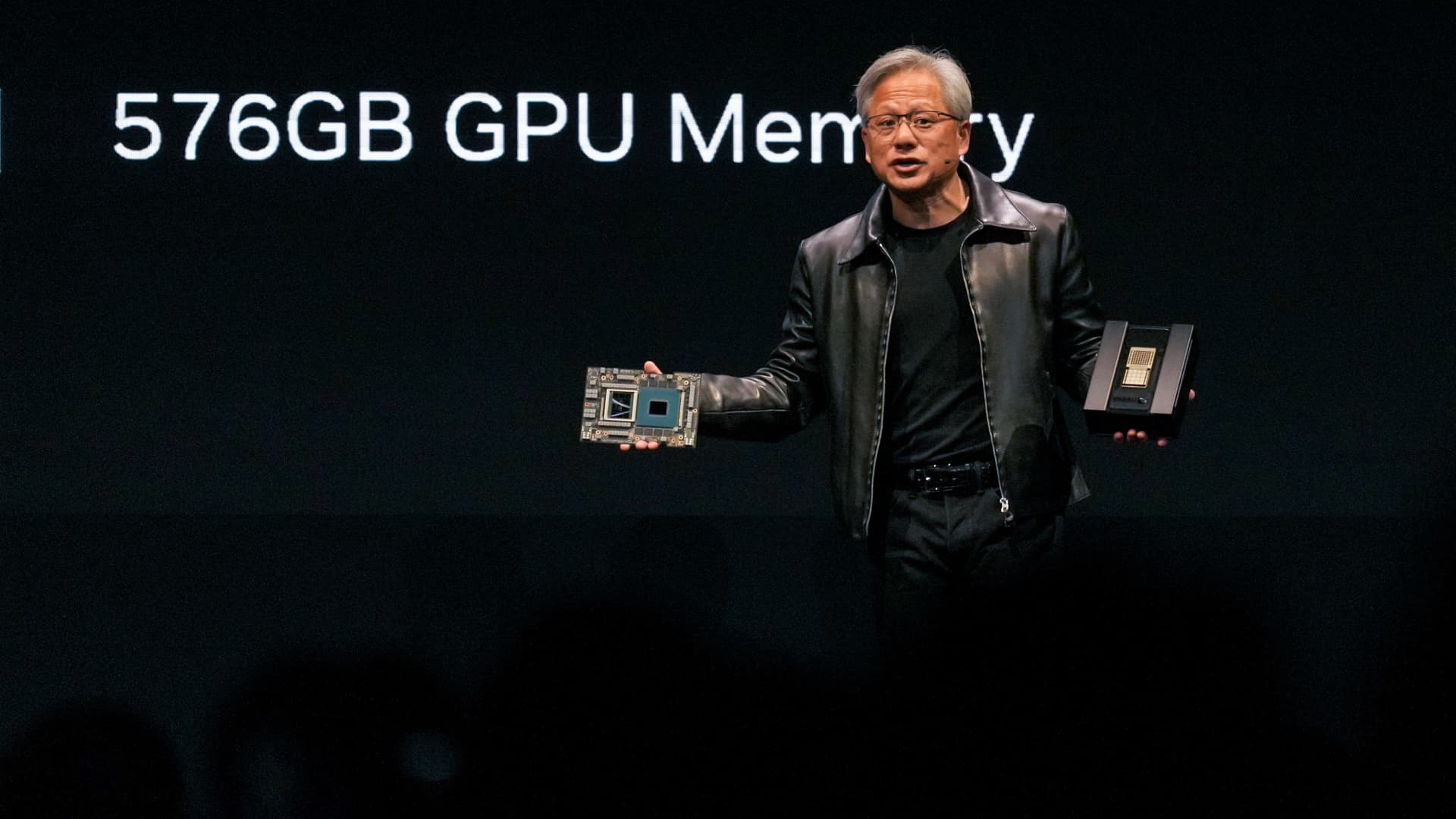

Nvidia president and CEO Jensen Huang delivered an intriguing speech at the COMPUTEX forum in Taiwan, stating that “Everyone is a programmer. Now, you just have to say something to the computer.”

Sopa Images | Lightrocket | Getty Images

Nvidia has unveiled its latest AI chip, the GH200, in a bid to maintain its dominance in the AI hardware market against competitors like AMD, Google, and Amazon.

Nvidia currently holds more than 80% of the AI chip market, according to various reports. Its GPUs have become the chips of choice for the large AI models behind applications such as Google’s Bard and OpenAI’s ChatGPT. However, Nvidia has struggled to meet demand as tech giants, cloud providers, and startups compete for GPU capacity to develop their own AI models.

The new GH200 uses the same GPU as Nvidia’s highest-end AI chip, the H100, but pairs it with 141 gigabytes of cutting-edge memory and a 72-core Arm central processor. Nvidia CEO Jensen Huang described the GH200 as a processor designed for the scale-out of data centers worldwide.

Huang said the GH200 should be available for sampling by the end of this year and will go on sale through Nvidia’s distributors in the second quarter of next year. No pricing details have been disclosed.

Working with AI models is typically divided into two stages: training and inference. During training, a model learns from massive amounts of data, a process that can take months and requires powerful GPUs such as Nvidia’s H100 and A100. Inference, by contrast, is the use of the trained model in software to make predictions or generate content. Inference is also computationally demanding, and it requires substantial processing power every time the software runs, so efficient hardware matters.

Nvidia’s GH200 is designed for inference, with more memory so that larger AI models can fit on a single system. On a call with analysts and reporters, Nvidia VP Ian Buck said the GH200 has 141GB of memory, compared with 80GB on the H100. Nvidia also unveiled a system that combines two GH200 chips into a single computer for even larger models.
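To give a rough sense of what the extra memory means, the sketch below estimates how much memory a model’s weights alone would occupy. It is a back-of-the-envelope illustration, not a figure from Nvidia: it assumes 2 bytes per parameter (16-bit weights), counts a gigabyte as 10^9 bytes, and ignores everything else a running model needs, such as activations and caches. The function names and the 70-billion-parameter model are hypothetical, chosen only for the example.

```python
GB = 10**9  # assumption: decimal gigabytes


def weight_footprint_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights only (assumes 16-bit weights by default)."""
    return num_params * bytes_per_param / GB


def fits(num_params: float, memory_gb: float, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in the given memory capacity."""
    return weight_footprint_gb(num_params, bytes_per_param) <= memory_gb


# Memory capacities as reported in the article.
CHIPS = {"H100": 80, "GH200": 141}

# Hypothetical model size used purely for illustration.
example_params = 70e9

for name, memory_gb in CHIPS.items():
    verdict = "fit" if fits(example_params, memory_gb) else "do not fit"
    print(f"{name} ({memory_gb} GB): 16-bit weights of a 70B-parameter model {verdict}")
```

Under these assumptions, the weights of the hypothetical model would need about 140GB, which exceeds the H100’s 80GB but sits just under the GH200’s 141GB. Real deployments need additional headroom, so this is only an upper bound on what fits.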

Nvidia’s announcement comes shortly after its primary GPU competitor, AMD, introduced its own AI-oriented chip, the MI300X, which supports 192GB of memory and is being marketed for its AI inference capabilities. Google and Amazon are also developing their own custom AI chips for inference.
